libx264 has so many presets and tunes that I was curious how they all relate to one another when it comes to encoding video into H.264. I was mostly interested in single-pass encoding for live video, so the measurements are for that mode of operation, with the encoder running in CRF (constant rate factor, X264_RC_CRF) mode.

So I took the Robotica_1080.wmv HD video in 1440×1080 resolution and batch-transcoded it into H.264 using libx264 (build 128) in various modes of operation:

- Presets: “ultrafast”, “superfast”, “veryfast”, “faster”, “fast”, “medium”, “slow”, “slower”, “veryslow”

- Tunes: “film”, “animation”, “grain”, “stillimage”, “psnr”, “ssim”, “fastdecode”, “zerolatency”, “touhou”

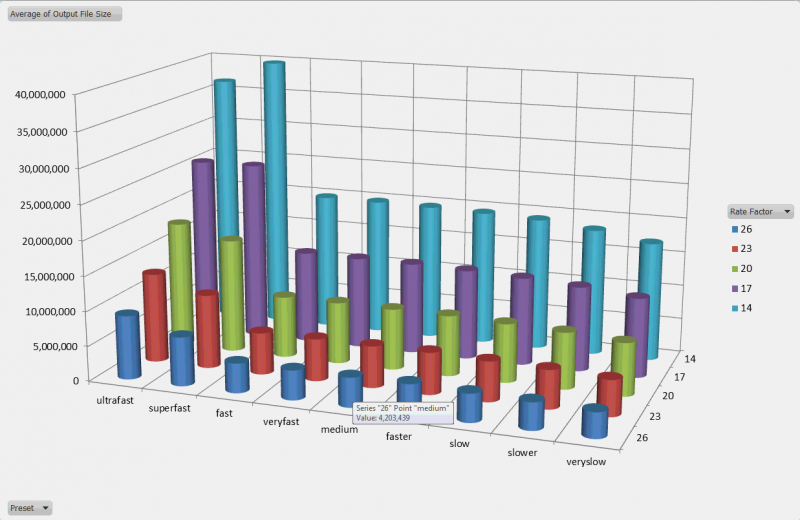

- CRFs: 14, 17, 20, 23, 26
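The run matrix above can be sketched as a short script. This is only an illustration: it assumes every preset/tune/CRF combination was run, and it uses the `ffmpeg` command line as a stand-in for the DirectShow graph actually used here. The output file naming scheme is hypothetical, and newer x264 builds may not accept every tune listed (for example "touhou").

```python
from itertools import product

PRESETS = ["ultrafast", "superfast", "veryfast", "faster", "fast",
           "medium", "slow", "slower", "veryslow"]
TUNES = ["film", "animation", "grain", "stillimage", "psnr", "ssim",
         "fastdecode", "zerolatency", "touhou"]
CRFS = [14, 17, 20, 23, 26]


def build_runs(source="Robotica_1080.wmv"):
    """Return one ffmpeg argument list per preset/tune/CRF combination."""
    runs = []
    for preset, tune, crf in product(PRESETS, TUNES, CRFS):
        # Hypothetical output naming scheme, not taken from the original setup.
        output = f"out_{preset}_{tune}_crf{crf}.mp4"
        runs.append([
            "ffmpeg", "-y", "-i", source,
            "-an",                      # video only, no audio track
            "-c:v", "libx264",
            "-preset", preset, "-tune", tune, "-crf", str(crf),
            output,
        ])
    return runs


if __name__ == "__main__":
    runs = build_runs()
    print(len(runs), "runs")
    print(" ".join(runs[0]))
```

Each argument list could be passed to `subprocess.run` and timed, which would give rough equivalents of the Elapsed Time and Output File Size values discussed below.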

It is worth mentioning that libx264 does an EXCELLENT job in transcoding in terms of performance. The transcoding operation was a DirectShow graph of the following topology:

Some measurements are obviously not quite accurate because they include not only encoding time but also WMV decoding time and other overhead. Still, this should give a good idea of how the modes compare side by side.

For every transcoding run I have the following values (Excel spreadsheet attached below):

- Processor Time: number of processor-milliseconds spent on the transcoding; I was measuring on an 8-core system, so at 100% load Processor Time could be up to eight times higher than Elapsed Time (below), provided that all cores were fully used

- Elapsed Time: wall-clock milliseconds spent on the transcoding, regardless of how many cores were actually in use; because the original clip is 20 seconds long, anything below 20,000 ms is faster than realtime processing

- Output File Size: size of the resulting video-only MP4 file; some headers count as well, but it is obviously mostly payload data; for a 20-second clip, 20 MB corresponds to a bitrate of 8 Mbit/s

Another, derived value is:

- Processor Time/Elapsed Time: shows how fully the multicore system is used; some modes are clearly not using all available cores, while others do
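The arithmetic behind these values is simple enough to sketch. The `Run` class and its field names below are hypothetical (they are not the spreadsheet's column names), and the sample numbers are made up purely to show the calculation.

```python
from dataclasses import dataclass

CLIP_SECONDS = 20.0  # length of the source clip


@dataclass
class Run:
    processor_ms: float  # Processor Time
    elapsed_ms: float    # Elapsed Time
    file_bytes: int      # Output File Size

    def core_utilization(self) -> float:
        """Processor Time / Elapsed Time: average number of busy cores."""
        return self.processor_ms / self.elapsed_ms

    def realtime_factor(self) -> float:
        """Clip duration / Elapsed Time: above 1.0 is faster than realtime."""
        return CLIP_SECONDS * 1000.0 / self.elapsed_ms

    def bitrate_mbit(self) -> float:
        """Average bitrate in Mbit/s, with 1 MB taken as 10**6 bytes."""
        return self.file_bytes * 8 / CLIP_SECONDS / 1e6


if __name__ == "__main__":
    # Illustrative values only, not measurements from the spreadsheet.
    sample = Run(processor_ms=40_000, elapsed_ms=10_000, file_bytes=20_000_000)
    print(sample.core_utilization(), sample.realtime_factor(), sample.bitrate_mbit())
```

On the 8-core test machine, `core_utilization()` can range up to 8.0; a value near 1.0 would mean the encoder was effectively running on a single core.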

Let us start looking at the pictures.

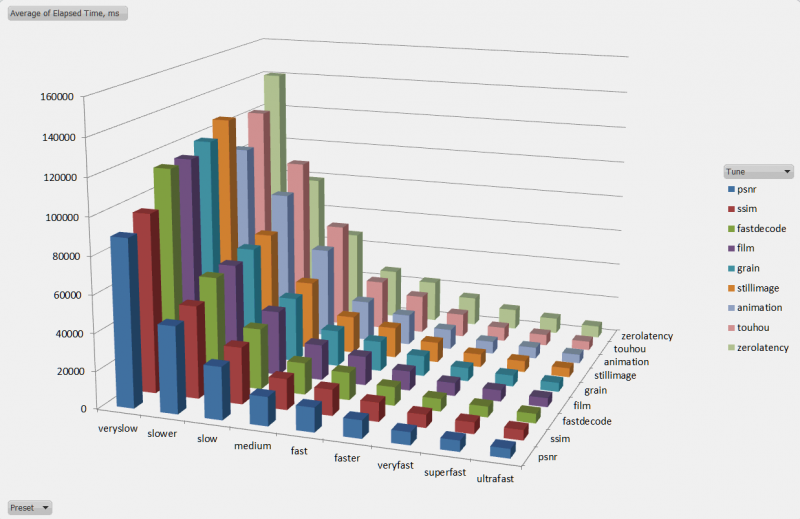

Average Elapsed Time for Preset/Tune (averaged over runs with different rate factors) shows that presets from slow onward take exponentially more time to encode. The psnr and ssim tunes transcode slightly faster, while the zerolatency tune is the most expensive.

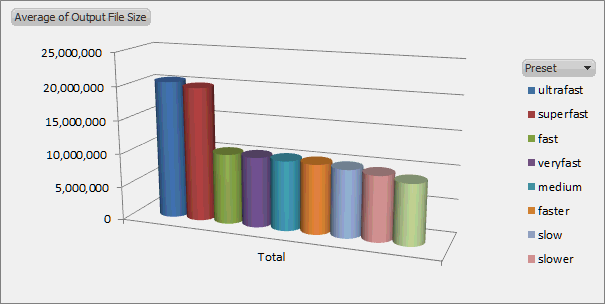

The ultrafast and superfast presets produced significantly larger files, about 2x as large as the other presets.

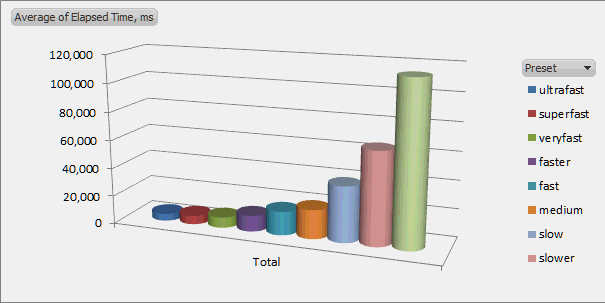

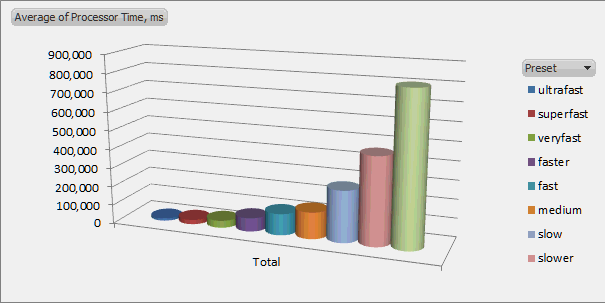

Once again, an exponential scale of Elapsed Time, and a similar Processor Time chart:

It is worth mentioning that the fastest presets are not using all CPU cores. Apart from being faster on their own, they leave some CPU time for other processing, which can be useful for live encoding applications and for those processing multiple streams at once.

And finally, the detailed dependency of file size on preset and CRF. As we already discovered, ultrafast and superfast produce a larger stream, while the output of the other modes does not differ much (within a few percent, mostly at the slowest end). A step of three in the rate factor shrinks the output to about 0.7x of its previous size in bytes.
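The 0.7x-per-three-steps observation can be turned into a rough rule of thumb. The function below is a sketch that assumes the ratio stays constant across the whole tested CRF range; the function name and its defaults are illustrative, not something taken from libx264.

```python
def relative_size(crf, reference_crf=23, ratio_per_step=0.7, step=3):
    """Estimate output size at `crf` relative to the size at `reference_crf`.

    Assumes every `step` increase in CRF multiplies the size by
    `ratio_per_step`, as observed in the measurements above.
    """
    return ratio_per_step ** ((crf - reference_crf) / step)


if __name__ == "__main__":
    for crf in (14, 17, 20, 23, 26):
        print(crf, round(relative_size(crf), 2))
```

Under this assumption, going from CRF 14 to CRF 26 (four steps of three) would shrink the output to roughly 0.7^4, or about a quarter of its size.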

More fun charts can be obtained from the attached .XLS file.

Download links: